Diffusion KPIs: A Guide to the Quantitative Metrics of Diffusion Models (DDMs) - Part 2/2

How do you quantify what diffusion models learned?

Table of Contents

Introduction

CLIP-Based Metrics

CLIP Score

CLIP Directional Score

Inception-Based Metrics

Inception Score (IS)

Fréchet Inception Distance (FID)

Kernel Inception Distance (KID)

Reconstruction Quality Metrics

Peak Signal-to-Noise Ratio (PSNR)

Structural Similarity Index (SSIM)

Precision and Recall

Recap

References

1. Introduction

In our last post, we discussed the qualitative metrics for evaluating diffusion models. We explored different benchmark datasets and walked through the evaluation process step by step. We also learned that quantitative metrics are just measurements of a qualitative aspect or concept.

Today, we will cover different quantitative metrics in detail and explain when and how to use them to evaluate diffusion models. We will begin with CLIP-based metrics, which use the CLIP model as a backbone for evaluating the samples generated by diffusion models. Then, we will explore Inception-based metrics, which use the Inception model for the evaluation process.

After that, we will discuss another family of metrics, the reconstruction quality metrics, which measure how much noise or distortion separates an original signal (e.g., an image) from its reconstructed or compressed version. Finally, we will end this post by covering two classical machine-learning metrics: precision and recall.

So, let’s get started!

3. CLIP-Based Metrics

The Contrastive Language-Image Pre-training model (CLIP) is one of the state-of-the-art vision-language models. Its workflow has two stages, and the result is a model that can classify images based on text descriptions:

Contrastive Pre-training: CLIP is trained on a dataset of image-text pairs, where the model learns to associate captions with images; in other words, it learns which image goes with which text or caption.

Zero-Shot Transfer: The pretrained model is then applied, without any additional training, to unseen datasets. The class names of a dataset are turned into captions (e.g., "a photo of a {object}"), and each image is assigned to the class whose caption embedding is most similar to the image embedding. The caption embeddings can be cached and reused for all subsequent predictions.

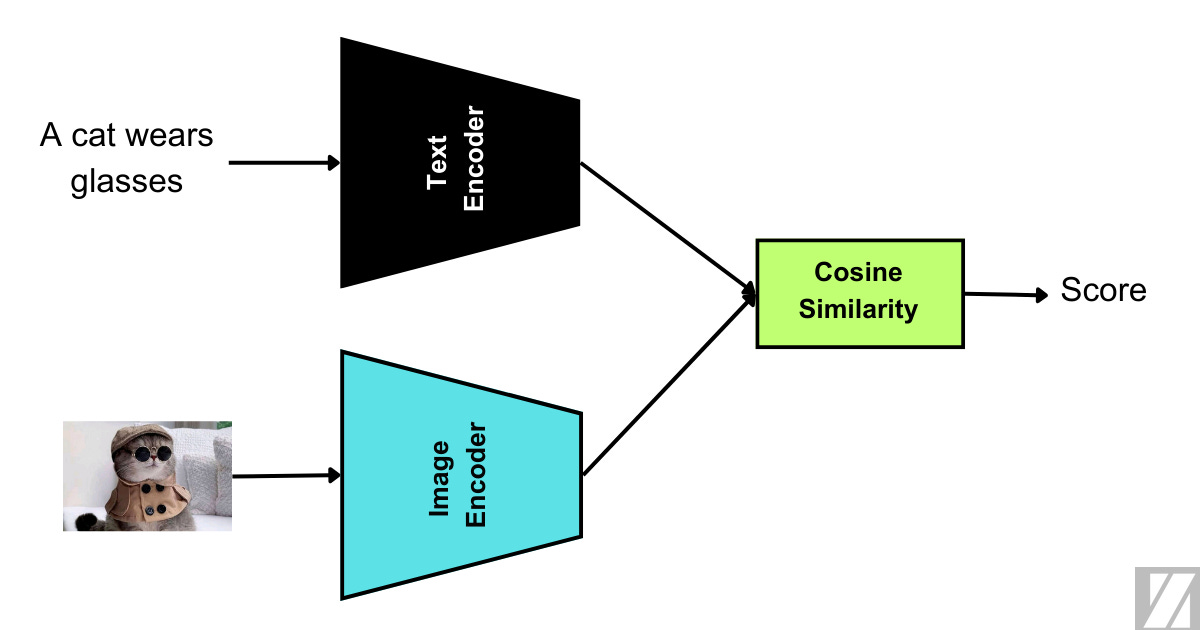

CLIP consists of two sub-models that allow it to operate over images and text descriptions.

Text Encoder: This learns to map texts to embedding representations.

Image Encoder: This learns to map images to embedding representations.

Leveraging the cosine similarity metric, we can measure the similarity between the text and image in the embedding space. According to the CLIP paper:

“When interpreted this way, the image encoder is the computer vision backbone which computes a feature representation for the image and the text encoder is a hypernetwork (Ha et al., 2016) which generates the weights of a linear classifier based on the text specifying the visual concepts that the classes represent.” (Radford et al., 2021, p. 6)

Finally, the metrics in this family use cosine similarity under the hood to compute their scores.
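To make the role of cosine similarity concrete, here is a minimal NumPy sketch of zero-shot classification in a shared embedding space. The vectors are made-up, 3-dimensional stand-ins for CLIP embeddings (real CLIP embeddings have hundreds of dimensions), no actual CLIP model is involved, and the helper name `cosine_similarity_matrix` is hypothetical.

```python
import numpy as np

def cosine_similarity_matrix(image_embs: np.ndarray, text_embs: np.ndarray) -> np.ndarray:
    """Return an (n_images, n_texts) matrix of cosine similarities."""
    # L2-normalize each row so that a dot product equals the cosine of the angle.
    img = image_embs / np.linalg.norm(image_embs, axis=1, keepdims=True)
    txt = text_embs / np.linalg.norm(text_embs, axis=1, keepdims=True)
    return img @ txt.T

# Hypothetical toy text embeddings for three prompts,
# e.g. "a photo of a cat", "a photo of a dog", "a photo of a leaf".
text_embs = np.array([
    [1.0, 0.1, 0.0],
    [0.1, 1.0, 0.0],
    [0.0, 0.1, 1.0],
])
# Hypothetical toy image embeddings.
image_embs = np.array([
    [0.9, 0.2, 0.1],  # points roughly in the direction of the first prompt
    [0.0, 0.1, 0.8],  # points roughly in the direction of the third prompt
])

sims = cosine_similarity_matrix(image_embs, text_embs)
# Zero-shot prediction: pick the prompt whose embedding is most similar to the image.
predicted = sims.argmax(axis=1)
print(np.round(sims, 3))
print(predicted)
```

Normalizing first means the whole similarity matrix is a single matrix product, which is also how batched CLIP comparisons are usually organized.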

3.1 CLIP Score

3.1.1 What is the CLIP score?

Now that we have a model that can measure the similarity between a text and an image, we can define the CLIP score: a metric that measures the similarity, or compatibility, between textual descriptions and model-generated images.
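One common way to turn this similarity into a score, and the definition given in the TorchMetrics `CLIPScore` documentation, is \(\text{CLIPScore}(I, C) = \max(100 \cdot \cos(E_I, E_C), 0)\), where \(E_I\) and \(E_C\) are the CLIP embeddings of the image and the caption. The sketch below applies that formula to hypothetical toy vectors rather than real CLIP embeddings; the function name `clip_score` is illustrative.

```python
import numpy as np

def clip_score(image_emb: np.ndarray, text_emb: np.ndarray) -> float:
    """CLIP-score-style value: 100 * cosine similarity, clipped at 0."""
    cos = float(image_emb @ text_emb / (np.linalg.norm(image_emb) * np.linalg.norm(text_emb)))
    return max(100.0 * cos, 0.0)

# Hypothetical toy embeddings; in practice they come from CLIP's image and text encoders.
image_emb = np.array([0.6, 0.8, 0.0])
matching_caption = np.array([0.6, 0.8, 0.0])    # same direction as the image
unrelated_caption = np.array([0.0, 0.0, 1.0])   # orthogonal to the image
opposite_caption = np.array([-0.6, -0.8, 0.0])  # negative cosine, clipped to 0

print(clip_score(image_emb, matching_caption))
print(clip_score(image_emb, unrelated_caption))
print(clip_score(image_emb, opposite_caption))
```

The clipping at 0 means negative similarities are not distinguished from "unrelated"; only degrees of positive alignment count.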

3.1.2 When is the CLIP score used?

CLIP score is usually used as a metric in text-to-image generation tasks.

3.1.3 Metric-Performance Relationship

A high CLIP Score indicates that the generated content is semantically relevant to the given text prompts. It suggests that the generative model can effectively align its output with textual descriptions.

A low CLIP Score suggests a lack of alignment between the generated content and text prompts, indicating that the generative model may struggle to produce textually relevant images.

3.1.4 How to use CLIP score?

One of the easiest ways to evaluate your diffusion model, or any other deep learning model, is the TorchMetrics library, a collection of metric implementations for PyTorch. Keep in mind that TorchMetrics operates on PyTorch tensors, so your model's outputs need to be converted to torch tensors before you can use it.

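```python
import torch
from torchmetrics.multimodal.clip_score import CLIPScore

# A random 3x224x224 image with integer pixel values in [0, 255), standing in for a generated image.
image = torch.randint(255, (3, 224, 224), generator=torch.manual_seed(42))
text = "a photo of a leaf"

# Loads the CLIP checkpoint from the Hugging Face Hub (requires the `transformers` package).
metric = CLIPScore(model_name_or_path="openai/clip-vit-base-patch16")
score = metric(image, text)
print(score.detach())
```

3.2 CLIP Directional Score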

Image editing is one of the hottest applications of generative modeling. In this task, we’re given an image and a text instruction, and we need to modify that image according to the given instruction.

3.2.1 What is the CLIP directional score?

The standard CLIP score can’t directly evaluate diffusion models that perform this type of task, because here we have four inputs:

An image that needs to be modified.

A text description of the original image.

The editing instructions.

The modified image.

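A smart way to work around this setting is to compare changes in the CLIP embedding space instead of the raw embeddings. We compute the direction of change between the two image embeddings (modified image minus original image) and the direction of change between the two text embeddings (editing instruction minus original description), and then measure the cosine similarity between these two directions. The score is high when the image changed in the same direction as the text, i.e., when the edit follows the instruction.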
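Here is a minimal NumPy sketch of that idea on hypothetical toy embeddings, loosely following the recipe in Hugging Face's "Evaluating Diffusion Models" guide (normalize each embedding, subtract, then take the cosine similarity). The axis meanings ("sunny", "cloudy", "sky") are invented purely for illustration, and the function names are hypothetical.

```python
import numpy as np

def normalize(v: np.ndarray) -> np.ndarray:
    return v / np.linalg.norm(v)

def clip_directional_similarity(img_orig, img_edit, txt_orig, txt_edit) -> float:
    # Direction of change in image-embedding space (edited minus original).
    img_dir = normalize(img_edit) - normalize(img_orig)
    # Direction of change in text-embedding space (instruction minus original description).
    txt_dir = normalize(txt_edit) - normalize(txt_orig)
    # Cosine similarity between the two directions.
    return float(img_dir @ txt_dir / (np.linalg.norm(img_dir) * np.linalg.norm(txt_dir)))

# Hypothetical 3-d embeddings: axis 0 ~ "sunny", axis 1 ~ "cloudy", axis 2 ~ "sky".
txt_orig = np.array([1.0, 0.0, 1.0])   # "a sunny sky"
txt_edit = np.array([0.0, 1.0, 1.0])   # "a cloudy sky"
img_orig = np.array([0.9, 0.1, 1.0])   # a sunny-looking sky image
good_edit = np.array([0.1, 0.9, 1.0])  # image moved toward "cloudy"
bad_edit = np.array([1.0, 0.0, 1.0])   # image moved toward "sunnier" instead

good_score = clip_directional_similarity(img_orig, good_edit, txt_orig, txt_edit)
bad_score = clip_directional_similarity(img_orig, bad_edit, txt_orig, txt_edit)
print(good_score, bad_score)
```

The edit that follows the instruction scores close to 1, while the edit that moves the image the opposite way gets a negative score.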

3.2.2 When is the CLIP directional score used?

The CLIP directional score is usually used as a metric in guided image editing tasks (e.g., text-guided image editing, drawing-guided image editing, etc.).

3.2.3 Metric-Performance Relationship

A high CLIP Directional Score indicates that the generated content has a strong alignment with a specific direction in the CLIP embedding space. This implies that the generative model can generate images that exhibit desired characteristics along that direction.

A low CLIP Directional Score suggests that the generative model does not effectively control the desired characteristics specified by the direction in the CLIP embedding space.

3.2.4 How to use the CLIP directional score?

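TorchMetrics’ CLIPScore compares a single image with a single caption, so it can’t compute this metric on its own. Here is a brief sketch that uses the Hugging Face `transformers` CLIP model instead: it embeds both images and both texts, normalizes the embeddings, and takes the cosine similarity between the image change and the text change:

```python
import torch
import torch.nn.functional as F
from transformers import CLIPModel, CLIPProcessor

model_id = "openai/clip-vit-base-patch16"
model = CLIPModel.from_pretrained(model_id)
processor = CLIPProcessor.from_pretrained(model_id)

# Random stand-ins for the original image and the edited image (3x224x224, values in [0, 255)).
original_image = torch.randint(255, (3, 224, 224), generator=torch.manual_seed(42))
edited_image = torch.randint(255, (3, 224, 224), generator=torch.manual_seed(43))

description = "a sunny sky"   # text describing the original image
instruction = "a cloudy sky"  # text describing the desired edited image

with torch.no_grad():
    image_inputs = processor(images=[original_image, edited_image], return_tensors="pt")
    image_embs = F.normalize(model.get_image_features(**image_inputs), dim=-1)
    text_inputs = processor(text=[description, instruction], return_tensors="pt", padding=True)
    text_embs = F.normalize(model.get_text_features(**text_inputs), dim=-1)

# Direction of change in each modality (edited minus original).
image_direction = image_embs[1] - image_embs[0]
text_direction = text_embs[1] - text_embs[0]

score = F.cosine_similarity(image_direction, text_direction, dim=0)
print(score.item())
```

4. Inception-Based Metrics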

Our second family of metrics is built on a popular deep learning architecture, Inception. This family consists of the following metrics:

Inception Score (IS)

Fréchet Inception Distance (FID)

Kernel Inception Distance (KID)

4.1 Inception model

4.1.1 What is Inception?

The Inception model is a deep convolutional architecture used for computer vision tasks such as image classification and object detection. It was introduced by Szegedy et al., and the version used by these metrics is trained on the ImageNet dataset, so it classifies an image into one of 1,000 object classes.

The model is built from stacked Inception blocks. Each block applies convolutions of several sizes in parallel to capture spatial information at multiple scales, while pooling layers reduce the spatial resolution and batch normalization layers speed up and stabilize training.

Inception has several versions, but the one used by the following metrics is Inception v3, which, among other improvements, applies batch normalization to the fully connected layer of its auxiliary classifier. This addition helps stabilize training and improves the overall performance of the model.

4.1.2 Inception Output

The output of the Inception model is a probability distribution over the 1,000 object classes in the ImageNet dataset.

However, most of the following metrics (FID and KID) don’t use this final output layer. Instead, they use the activations of the last pooling layer before the output layer, which has 2,048 units.

4.2 Inception Score (IS)

The Inception Score is a popular metric that was originally used to evaluate GAN models, and it is now commonly used to evaluate the images produced by other generative models as well.

Given the generated image samples from our generative model, it tries to measure two aspects:

Quality: how real are the generated images?

Diversity: how diverse are the generated images?

4.2.1 How does it work?

The Inception Score (IS) uses the output of the final layer of the Inception model to measure the quality and diversity of generated images. It is typically computed over 50,000 generated samples so that the estimate is statistically reliable.

To measure quality, the Inception Score assumes that a realistic image contains a clearly recognizable object, so a high-quality image is one that the Inception model classifies with high confidence, and vice versa. Passing an image through the Inception model gives a probability vector over the classes, which can be visualized as a histogram; we call it the “focused distribution”. The term “focused” comes from the fact that, ideally, this distribution has a single clear peak at the class of the recognized object. Here is a figure that shows the focused distribution:

Then, in the ideal case, if we pass the images that belong to a single class through our Inception model and sum their probability vectors, we get a similarly peaked distribution:

To measure diversity, the Inception Score looks at the entropy of the predictions across all generated images, rather than within a single image. A diverse generator produces images of many different classes, so when we combine the predictions of all images, no single class dominates and the combined distribution has high entropy (it is close to uniform). Here is a figure explaining that:

In the ideal scenario, if we pass all 50K images through the Inception model and average their probability vectors, we get an approximately uniform distribution. This averaged distribution is called the marginal distribution. Check the next image:

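Let’s define the focused (conditional) distribution of a generated image \(x_i\) and the marginal distribution over \(N\) generated images as follows:

\(\begin{array}{rcl} p(y \mid x_i) &=& \text{The focused distribution: the Inception class probabilities for image } x_i. \\ p(y) &=& \frac{1}{N}\sum_{i=1}^{N} p(y \mid x_i) \quad \text{(the marginal distribution)} \end{array}\)

Given this, the Inception Score (IS) is calculated using the following formula:

\(IS = \exp\left(\frac{1}{N}\sum_{i=1}^{N} D_{KL}\left(p(y \mid x_i) \,\|\, p(y)\right)\right)\)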

In other words, the Inception Score measures how different each image’s focused distribution is from the marginal distribution, using the Kullback-Leibler (KL) divergence. A high IS means these distributions differ a lot on average: each image is confidently classified (high quality), while the images as a whole cover many classes (high diversity). A low IS means the opposite.
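The formula \(IS = \exp\left(\frac{1}{N}\sum_i D_{KL}(p(y \mid x_i) \,\|\, p(y))\right)\) can be sketched in a few lines of NumPy. Here the per-image probability vectors are hand-made toy arrays rather than real Inception v3 softmax outputs, and, unlike common reference implementations that average over several splits (often 10), this sketch uses a single split; the function name is hypothetical.

```python
import numpy as np

def inception_score(probs: np.ndarray, eps: float = 1e-12) -> float:
    """probs: (N, num_classes) array; row i is p(y | x_i) from a classifier."""
    # Marginal distribution p(y): the average of all per-image distributions.
    p_y = probs.mean(axis=0, keepdims=True)
    # KL(p(y|x_i) || p(y)) for every image; eps avoids log(0).
    kl = np.sum(probs * (np.log(probs + eps) - np.log(p_y + eps)), axis=1)
    # IS = exp(mean KL divergence).
    return float(np.exp(kl.mean()))

# Ideal generator: each image is confidently one class, and all 10 classes are covered.
ideal = np.eye(10)
# Mode collapse: every image is confidently the same class.
collapsed = np.tile(np.eye(10)[0], (10, 1))
# Unrecognizable images: every prediction is uniform.
blurry = np.full((10, 10), 0.1)

print(inception_score(ideal))      # close to 10, the number of classes
print(inception_score(collapsed))  # close to 1
print(inception_score(blurry))     # close to 1
```

The two failure cases show why both ingredients matter: confident but identical predictions (no diversity) and diverse but unconfident predictions (no quality) both collapse the score to its minimum of 1.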

4.2.2 Metric-Performance Relationship

A high Inception Score suggests that the generated images are diverse and are classified with high confidence by the Inception classifier.

A low Inception Score may indicate that the generated images lack diversity or are not easily distinguishable by the classifier.

4.2.3 How to implement it?

Using the TorchMetrics library, we can compute the Inception Score (IS) as follows:

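```python
import torch
from torchmetrics.image.inception import InceptionScore

# TorchMetrics relies on the `torch-fidelity` package for the Inception network used here.
generator = torch.manual_seed(123)

# Generate some random uint8 images as stand-ins for generated samples (N x 3 x 299 x 299).
imgs = torch.randint(0, 255, (100, 3, 299, 299), generator=generator, dtype=torch.uint8)

inception = InceptionScore()
inception.update(imgs)
# Returns the mean and standard deviation of the score over the splits.
is_mean, is_std = inception.compute()
print(is_mean, is_std)
```

4.2.4 Limitations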

The Inception Score (IS) has several limitations that prevent it from being a good fit for every generative task:

Data Limited: The Inception Score is limited by the data used to train the Inception model. It fails to properly measure generated images whose content the Inception model never saw during training, or whose classes differ from the classes in the Inception model’s training data.

Overfitting Problem: The Inception Score fails when the generative model (e.g., a diffusion model) memorizes its training data and reproduces it. Since IS never compares against the training data, a model that simply copies training images can still get a high score.

Intra-Class Diversity: The Inception Score can’t capture diversity within a class. If the model keeps generating near-identical images for each class, IS can still be high as long as the classes themselves are covered evenly.

Ground-truth Data: IS doesn’t include the ground-truth data in the measurement process. It only uses the statistics of the generated data to compute the score.

4.3 Fréchet Inception Distance (FID)

Due to the previous limitations, several improvements have been made to the Inception score (IS) metric. One of those improvements is the Fréchet Inception Distance metric or FID. The Fréchet Inception Distance metric includes the ground truth data statistics during the measurement process, which helps in delivering a more reliable and domain-related score.

FID metric measures the following qualitative concepts:

Quality: how real are the generated images?

Diversity: how diverse are the generated images?

4.3.1 How does it work?

Like the Inception Score (IS), FID uses the Inception model for the measurement. However, instead of the final output layer values, it uses the values of the final pooling layer. These features allow us to compute the statistics needed for the score.

Here are the steps to compute the FID score:

First, we remove (or ignore) the final output layer of the Inception model.

Second, we pass our generated images through the truncated Inception model to get their latent representations from the pooling layer.

Third, we pass our ground-truth images through the truncated Inception model to get their latent representations from the pooling layer.

Fourth, we compute the mean and covariance matrix of the features for the real images and for the synthetic images.

Finally, we compute the FID score using the following formula:

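\(\begin{array}{rcl} FID &=& \|\mu_p - \mu_q\|^2 + Tr\left(\Sigma_p + \Sigma_q - 2\left(\Sigma_p \Sigma_q\right)^{1/2}\right) \\ where: \\ \mu_p &=& \text{The mean of the real images features.} \\ \mu_q &=& \text{The mean of the generated images features.} \\ \Sigma_p &=& \text{The covariance matrix of the real images features.} \\ \Sigma_q &=& \text{The covariance matrix of the generated images features.} \end{array}\)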

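In other words, FID models the real and generated features as two multivariate Gaussian distributions and measures the Fréchet distance between them: the first term compares their means, and the trace term compares their covariances. Because the statistics of the real images are part of the formula, FID is aware of the target domain.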
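Here is a minimal NumPy/SciPy sketch of the standard FID formula, \(\|\mu_p - \mu_q\|^2 + Tr(\Sigma_p + \Sigma_q - 2(\Sigma_p\Sigma_q)^{1/2})\), applied to feature matrices. The features are hypothetical 8-dimensional Gaussian samples instead of real 2,048-dimensional Inception activations, and the function name `frechet_distance` is illustrative; in practice you would feed it the pooling-layer features described above.

```python
import numpy as np
from scipy import linalg

def frechet_distance(real_feats: np.ndarray, gen_feats: np.ndarray) -> float:
    """real_feats, gen_feats: (num_images, feature_dim) arrays of features."""
    mu_p, mu_q = real_feats.mean(axis=0), gen_feats.mean(axis=0)
    sigma_p = np.cov(real_feats, rowvar=False)
    sigma_q = np.cov(gen_feats, rowvar=False)
    # Matrix square root of Sigma_p @ Sigma_q; drop tiny imaginary parts from numerical error.
    covmean = np.real(linalg.sqrtm(sigma_p @ sigma_q))
    mean_term = np.sum((mu_p - mu_q) ** 2)
    trace_term = np.trace(sigma_p + sigma_q - 2.0 * covmean)
    return float(mean_term + trace_term)

rng = np.random.default_rng(0)
# Hypothetical 8-dimensional features for 500 "images".
real = rng.normal(size=(500, 8))
same = real.copy()
shifted = real + 2.0  # same covariance, mean shifted by 2 in every dimension

print(frechet_distance(real, same))     # approximately 0
print(frechet_distance(real, shifted))  # approximately 8 * 2**2 = 32
```

In the shifted case the covariances are identical, so the trace term vanishes and only the squared distance between the means remains, which makes the role of each term easy to see.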

4.3.2 Metric-Performance Relationship

A low FID indicates that the generated images are very similar to the real images in terms of both distribution and visual quality.

A high FID suggests that the generated images differ significantly from the real images, either in terms of distribution or visual quality.

4.3.3 How to implement it?

With the TorchMetrics library, we can implement the Fréchet Inception Distance (FID) score as follows:

```python
import torch
from torchmetrics.image.fid import FrechetInceptionDistance

torch.manual_seed(123)

# Generate two slightly overlapping image intensity distributions.
imgs_dist1 = torch.randint(0, 200, (100, 3, 299, 299), dtype=torch.uint8)
imgs_dist2 = torch.randint(100, 255, (100, 3, 299, 299), dtype=torch.uint8)

# feature=64 selects a small 64-dim Inception layer so that this toy example with only
# 100 images per set still has well-conditioned covariances; standard FID uses feature=2048.
fid = FrechetInceptionDistance(feature=64)
fid.update(imgs_dist1, real=True)
fid.update(imgs_dist2, real=False)
print(fid.compute())
```

4.3.4 Limitations

Pretrained Model: FID depends on the Inception model for the measurement process. This makes it less reliable for generated samples from models trained on data that differs from the Inception model’s training data.

Large Sample Size: To get a reliable score, FID needs a large number of samples (at least 10,000 images is a common recommendation), which is computationally expensive. With fewer samples, the estimate is biased upward.

Limited Statistics: Although FID improves on IS by leveraging ground-truth data statistics, it only uses the first two moments (means and covariances), which implicitly assumes that the features follow a Gaussian distribution.

4.4 Kernel Inception Distance (KID)

Another interesting metric is the Kernel Inception Distance (KID). Like FID, it compares the Inception features of real and generated images. However, instead of summarizing each set with means and covariances, it uses a kernel function to capture more complex similarity relationships between the features. The original KID formulation (and TorchMetrics’ default) uses a cubic polynomial kernel; the steps below use an RBF kernel, which is a common alternative.

4.4.1 How does it work?

KID uses the Inception model to generate latent representations for real and synthetic images. It calculates the score through the following steps:

Latent Features: First, we take the output of the Inception model’s final pooling layer as the latent representation of each real and generated image.

Kernel Features: Second, we compare the features pairwise using a kernel function. With an RBF kernel, the similarity between two feature vectors \(x\) and \(y\) is:

\(\begin{array}{rcl} k(x, y) &=& \exp\left(-\frac{\|x - y\|^2}{2\sigma^2}\right) \\ \\ \textbf{where:} \\ \phi_p^i &=& \text{The real image features (image } i\text{) using the Inception model.} \\ \phi_q^j &=& \text{The synthetic image features (image } j\text{) using the Inception model.} \\ \sigma &=& \text{The bandwidth (temperature) parameter of the RBF kernel.} \end{array}\)

We evaluate this kernel on three groups of pairs: real-real, synthetic-synthetic, and real-synthetic.

Mean Calculation: Third, we calculate the mean kernel value of each group (the within-set means exclude each sample’s similarity with itself):

\(\begin{array}{rcl} \mu_{pp} &=& \frac{1}{m(m-1)} \sum_{i \neq j} k(\phi_p^i, \phi_p^j) \\ \mu_{qq} &=& \frac{1}{n(n-1)} \sum_{i \neq j} k(\phi_q^i, \phi_q^j) \\ \mu_{pq} &=& \frac{1}{mn} \sum_{i=1}^{m} \sum_{j=1}^{n} k(\phi_p^i, \phi_q^j) \\ \\ where: \\ m, n &=& \text{The number of real and generated images.} \end{array}\)

Score Calculation: Finally, we combine these means into the unbiased squared Maximum Mean Discrepancy (MMD) between the two feature sets, which is our KID score:

\(KID = \mu_{pp} + \mu_{qq} - 2\mu_{pq}\)
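The following NumPy sketch implements KID as the unbiased squared-MMD estimator with an RBF kernel, i.e. \(\mu_{pp} + \mu_{qq} - 2\mu_{pq}\) with the diagonal excluded from the within-set means. The features are hypothetical 4-dimensional Gaussian samples, `sigma=1.0` is an arbitrary choice, and the function names are illustrative. TorchMetrics’ `KernelInceptionDistance` uses a polynomial kernel by default and averages over random subsets, so its numbers will not match this sketch.

```python
import numpy as np

def rbf_kernel(a: np.ndarray, b: np.ndarray, sigma: float) -> np.ndarray:
    """Pairwise RBF kernel values between the rows of a and the rows of b."""
    sq_dists = (
        np.sum(a**2, axis=1)[:, None]
        + np.sum(b**2, axis=1)[None, :]
        - 2.0 * a @ b.T
    )
    sq_dists = np.maximum(sq_dists, 0.0)  # guard against tiny negatives from rounding
    return np.exp(-sq_dists / (2.0 * sigma**2))

def kid_rbf(real_feats: np.ndarray, gen_feats: np.ndarray, sigma: float = 1.0) -> float:
    m, n = len(real_feats), len(gen_feats)
    k_pp = rbf_kernel(real_feats, real_feats, sigma)
    k_qq = rbf_kernel(gen_feats, gen_feats, sigma)
    k_pq = rbf_kernel(real_feats, gen_feats, sigma)
    # Within-set means exclude the diagonal (each sample compared with itself).
    mu_pp = (k_pp.sum() - np.trace(k_pp)) / (m * (m - 1))
    mu_qq = (k_qq.sum() - np.trace(k_qq)) / (n * (n - 1))
    mu_pq = k_pq.mean()
    return float(mu_pp + mu_qq - 2.0 * mu_pq)

rng = np.random.default_rng(0)
# Hypothetical 4-dimensional "Inception features".
real = rng.normal(size=(200, 4))
gen_good = rng.normal(size=(200, 4))          # same distribution as the real features
gen_bad = rng.normal(loc=3.0, size=(200, 4))  # shifted distribution

kid_good = kid_rbf(real, gen_good)
kid_bad = kid_rbf(real, gen_bad)
print(kid_good, kid_bad)
```

Because the estimator is unbiased, a generator that matches the real distribution scores near zero (and can even be slightly negative), while the shifted distribution yields a clearly positive value.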

4.4.2 Metric-Performance Relationship

A low KID indicates that the feature representations of generated and real images are highly similar in the chosen feature space. Lower KID values imply better similarity between the distributions of features.

A high KID suggests that there is substantial dissimilarity between the feature representations of generated and real images.

4.4.3 How to implement it?

TorchMetrics implements the KID metric in the KernelInceptionDistance class. Note that, by default, it uses a polynomial kernel rather than an RBF kernel.

```python
import torch
from torchmetrics.image.kid import KernelInceptionDistance

torch.manual_seed(123)

# subset_size must not exceed the number of images in each set (100 here).
kid = KernelInceptionDistance(subset_size=50)

imgs_dist1 = torch.randint(0, 200, (100, 3, 299, 299), dtype=torch.uint8)
imgs_dist2 = torch.randint(100, 255, (100, 3, 299, 299), dtype=torch.uint8)

kid.update(imgs_dist1, real=True)
kid.update(imgs_dist2, real=False)
# Returns the mean and standard deviation of KID over random subsets.
kid_mean, kid_std = kid.compute()
print(kid_mean, kid_std)
```

4.4.4 Limitations

KID shares the limitations of the other metrics in this family. Here are some of them:

Pretrained Model: KID uses the Inception model under the hood, which may hurt its reliability when the generative model being evaluated is trained on data that differs from the Inception model’s training data.

Large Sample Size: Although KID’s estimator is unbiased (unlike FID’s), its variance is high for small sample sizes, so it still needs a reasonably large number of samples to give stable results, which makes the computation expensive.

Hyperparameter Sensitivity: The KID score depends on the kernel’s hyperparameters (e.g., the RBF temperature, or the degree of a polynomial kernel), so these need to be fixed before using KID to compare models.

Lack of Interpretability: The KID score doesn’t necessarily correlate with image quality, so sometimes it becomes misleading.

5. Reconstruction Quality Metrics

This family of metrics is popular in the telecommunication field, where a signal is dispatched by a sender and arrives at a receiver. The goal is to measure the quality of the signal on the receiver side, which is called signal reconstruction quality measurement.

5.1 Peak Signal-to-Noise Ratio (PSNR)

The Peak Signal-to-Noise Ratio (PSNR) is one of the most popular metrics for assessing the quality of reconstructed signals. It is widely used to assess the quality of images produced by generative models, and it is measured in decibels (dB).

5.1.1 How does it work?

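The PSNR score is calculated using the following formula:

\(\begin{array}{rcl} PSNR &=& 10 \log_{10}\left(\frac{R^2}{MSE}\right) \\ MSE &=& \frac{1}{MN}\sum_{i=1}^{M}\sum_{j=1}^{N}\left(I_{real}(i, j) - I_{gen}(i, j)\right)^2 \end{array}\)

where \(M \times N\) is the image size.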

Note: The above formula calculates PSNR for a single pair of real and generated images. To calculate PSNR for a batch of images, compute it for each image individually (or batch-wise).

The calculation of the PSNR score depends on two things:

Maximum Fluctuation Value (R): This represents the maximum possible pixel value of the image data type, and it appears squared (R²) in the formula. For an unsigned 8-bit image, R = 255; for a floating-point image with values in [0, 1], R = 1.

Mean Squared Error (MSE): The mean squared error between the generated image (received signal) and the real image (sent signal).
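As a quick check of the formula \(PSNR = 10 \log_{10}(R^2 / MSE)\), here is a minimal NumPy sketch; the function name `psnr` is illustrative. It reuses the toy values from the TorchMetrics example in this section, where the data range is R = 3 (the spread of the target values).

```python
import numpy as np

def psnr(generated: np.ndarray, reference: np.ndarray, max_value: float) -> float:
    """PSNR in dB between two same-shaped images; max_value is R."""
    diff = generated.astype(np.float64) - reference.astype(np.float64)
    mse = np.mean(diff**2)
    if mse == 0:
        return float("inf")  # identical images: there is no noise at all
    return float(10.0 * np.log10(max_value**2 / mse))

preds = np.array([[0.0, 1.0], [2.0, 3.0]])
target = np.array([[3.0, 2.0], [1.0, 0.0]])
# MSE = (9 + 1 + 1 + 9) / 4 = 5, so PSNR = 10 * log10(9 / 5)
print(psnr(preds, target, max_value=3.0))  # approximately 2.5527

# For unsigned 8-bit images, use max_value=255.
```

Casting to float64 before subtracting matters for 8-bit inputs, where `uint8` subtraction would otherwise wrap around.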

5.1.2 How to implement it?

Again, using TorchMetrics we can compute the PSNR score as follows:

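```python
import torch
from torchmetrics.image import PeakSignalNoiseRatio

# With no data_range given, TorchMetrics infers the range from the data (here 3.0).
psnr = PeakSignalNoiseRatio()
preds = torch.tensor([[0.0, 1.0], [2.0, 3.0]])
target = torch.tensor([[3.0, 2.0], [1.0, 0.0]])
print(psnr(preds, target))  # tensor(2.5527)
```

5.1.3 Metric-Performance Relationship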

A high PSNR value indicates that the generated image is very close to the reference image in terms of pixel-wise similarity.

A low PSNR value implies that the generated image has significant pixel-wise differences compared to the reference image, indicating lower image quality.

5.1.4 Limitations

The PSNR metric suffers from the following limitations:

Pixel-Wise Computation: PSNR operates directly on raw pixel values, so its cost grows with image resolution, and, more importantly, pixel-wise errors don’t always match human perception of image quality.

No Spatial Information: PSNR treats every pixel independently, so it ignores spatial structure such as edges and textures; two images with very different structure can have the same MSE and therefore the same PSNR.

5.2 Structural Similarity Index (SSIM)

The Structural Similarity Index (SSIM) is used for assessing the structural similarity between two images. It is widely used in image and video processing to assess the quality of image compression, denoising, and super-resolution.

SSIM measures three things:

1. Luminance Comparison (L): It assesses the similarity in terms of image brightness.

2. Contrast Comparison (C): It measures the similarity in terms of contrast or texture.

3. Structure Comparison (S): This component evaluates the perceived similarity of the structural content between the reference and distorted images. It takes into account the spatial arrangement of pixels and edges.

The SSIM index is calculated as a combination of these three components and its value falls in the range between -1 and 1, where 1 indicates perfect similarity and -1 indicates complete dissimilarity.

5.2.1 How does it work?
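The standard SSIM formula combines the three comparisons above into a single expression:

\(SSIM(x, y) = \frac{(2\mu_x\mu_y + C_1)(2\sigma_{xy} + C_2)}{(\mu_x^2 + \mu_y^2 + C_1)(\sigma_x^2 + \sigma_y^2 + C_2)}\)

where \(\mu\) are the means, \(\sigma^2\) the variances, \(\sigma_{xy}\) the covariance, and \(C_1 = (0.01L)^2\), \(C_2 = (0.03L)^2\) are small stabilizing constants for a data range \(L\). In practice, SSIM is computed over small local windows and the results are averaged. The NumPy sketch below deliberately simplifies this by computing one global value over the whole image, so it will not match TorchMetrics’ output; `global_ssim` is a hypothetical name.

```python
import numpy as np

def global_ssim(x: np.ndarray, y: np.ndarray, data_range: float = 1.0) -> float:
    """Simplified SSIM computed once over the whole image (no sliding window)."""
    x = x.astype(np.float64)
    y = y.astype(np.float64)
    c1 = (0.01 * data_range) ** 2  # stabilizes the luminance term
    c2 = (0.03 * data_range) ** 2  # stabilizes the contrast/structure term
    mu_x, mu_y = x.mean(), y.mean()
    var_x, var_y = x.var(), y.var()
    cov_xy = np.mean((x - mu_x) * (y - mu_y))
    numerator = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    denominator = (mu_x**2 + mu_y**2 + c1) * (var_x + var_y + c2)
    return float(numerator / denominator)

rng = np.random.default_rng(0)
image = rng.random((64, 64))  # toy grayscale image with values in [0, 1]
noisy = np.clip(image + rng.normal(scale=0.2, size=image.shape), 0.0, 1.0)

print(global_ssim(image, image))  # approximately 1.0 for identical images
print(global_ssim(image, noisy))  # lower than 1.0
```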

5.2.2 How to implement it?

Let’s use the TorchMetrics StructuralSimilarityIndexMeasure class to compute SSIM:

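```python
import torch
from torchmetrics.image import StructuralSimilarityIndexMeasure

# A batch of 3 random RGB images (N x C x H x W) with values in [0, 1].
generated_image = torch.rand([3, 3, 256, 256])
# A darker copy of the same images, used here as the "real" reference.
real_image = generated_image * 0.75

ssim = StructuralSimilarityIndexMeasure(data_range=1.0)
print(ssim(generated_image, real_image))
```

5.2.3 Metric-Performance Relationship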

A high SSIM value indicates that the generated image is very similar to the reference image in terms of structural and perceptual quality. Higher SSIM values suggest better image quality.

A low SSIM value suggests that there are structural and perceptual differences between the generated and reference images, indicating lower image quality.

5.2.4 Limitations

The Structural Similarity Index (SSIM) metric encounters the following limitations:

Noisy Images: SSIM performs poorly when it comes to noisy images.

Sensitivity to Shifts and Scaling: SSIM can’t handle shifts and scaling operations well, because these operations misalign the image structures being compared, even when the two images look almost the same to a human.

6. Traditional Metrics

The last family of metrics covers two traditional metrics: precision and recall. These metrics are used to assess specific aspects of generated images. For instance, we may want to see how good the generative model is at creating particular objects in the images it generates.

6.1 Precision

Precision is the ratio of true positives (TP) to all predicted positives, i.e., true positives plus false positives (FP): \(Precision = \frac{TP}{TP + FP}\).

6.2 Recall

Recall is the ratio of true positives (TP) to all actual positives in the dataset, i.e., true positives plus false negatives (FN): \(Recall = \frac{TP}{TP + FN}\).
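Both definitions can be computed directly from per-sample yes/no decisions. In this hypothetical sketch, `predicted` marks the samples that some checker (for example, an object detector run on generated images) flags as positive, and `actual` is the ground truth; both lists are made up for illustration.

```python
def precision_recall(predicted: list[bool], actual: list[bool]) -> tuple[float, float]:
    """Binary precision and recall from per-sample predictions and ground truth."""
    pairs = list(zip(predicted, actual))
    tp = sum(p and a for p, a in pairs)      # predicted positive, actually positive
    fp = sum(p and not a for p, a in pairs)  # predicted positive, actually negative
    fn = sum(a and not p for p, a in pairs)  # predicted negative, actually positive
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall

# Hypothetical data: 4 of 6 samples are truly positive, but only 2 are flagged.
predicted = [True, True, False, False, False, False]
actual = [True, True, True, True, False, False]

print(precision_recall(predicted, actual))  # (1.0, 0.5)
```

Every flagged sample is correct (precision 1.0), yet half of the real positives are missed (recall 0.5), which is why the two metrics are usually reported together.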

6.3 Metric-Performance Relationship

For Precision:

A high value of precision means that when the classifier predicts a positive outcome, it is likely to be correct, minimizing false positive errors.

A low value of precision means that the classifier is prone to making false positive errors when predicting positive outcomes.

For Recall:

A high value of recall means that the classifier can identify most of the actual positive instances, minimizing false negative errors.

A low value of recall means that the classifier frequently misses actual positive cases, resulting in a higher rate of false negatives.

6.4 How to implement it?

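TorchMetrics provides functional implementations of precision and recall. Recent releases no longer include the combined `precision_recall` helper, so we call `precision` and `recall` separately and pass a `task` argument: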

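```python
import torch
from torchmetrics.functional import precision, recall

preds = torch.tensor([2, 0, 2, 1])
target = torch.tensor([1, 1, 2, 0])

# Macro averaging: compute each metric per class, then average over the classes.
print(precision(preds, target, task="multiclass", average="macro", num_classes=3))
print(recall(preds, target, task="multiclass", average="macro", num_classes=3))

# Micro averaging: pool true/false positives and negatives over all classes first.
print(precision(preds, target, task="multiclass", average="micro", num_classes=3))
print(recall(preds, target, task="multiclass", average="micro", num_classes=3))
```

7. Recap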

In this post, we covered various quantitative metrics for evaluating diffusion models.

We discussed CLIP-based metrics, which use the CLIP model to measure the similarity between text and generated images.

CLIP Score measures compatibility between textual descriptions and model-generated images.

CLIP Directional Score assesses alignment with a specific direction in the CLIP embedding space.

Inception-based metrics include Fréchet Inception Distance (FID), Kernel Inception Distance (KID), and Inception Score (IS), which measure image quality and diversity.

Reconstruction quality metrics, such as Peak Signal-to-Noise Ratio (PSNR) and Structural Similarity Index (SSIM), assess image quality.

Precision and Recall are traditional metrics used to assess the generative model's ability to create specific objects in generated images.

8. References

Compute peak signal-to-noise ratio (PSNR) between images, by MathWorks, mathworks.com.

A simple explanation of the Inception Score, by David Mack, Medium.

Evaluating Diffusion Models, by Hugging Face, huggingface.co.

Inception score (IS), by Stephen J. Bigelow, TechTarget, techtarget.com.

How to evaluate GANs using Fréchet Inception Distance (FID), by Ayush Thakur, Weights & Biases.

How to implement Fréchet Inception Distance (FID) for evaluating GANs, by Jason Brownlee, Machine Learning Mastery.

Fréchet Inception Distance (FID), by George Lawton, TechTarget, techtarget.com.

TorchMetrics documentation, torchmetrics.readthedocs.io.

Before Goodbye!

Want to Cite this Article?

```bibtex
@article{khamies2023quantitative,
  title = "Diffusion KPIs: A Guide to the Quantitative Metrics of Diffusion Models (DDMs) - Part 2/2",
  author = "Waleed Khamies",
  journal = "Zitoon.ai",
  year = "2023",
  month = "Sept",
  url = "https://publication.zitoon.ai/diffusion-KPIs-a-guide-to-the-quantitative-metrics-of-diffusion-models"
}
```

New to this Series?

New to the “Generative Modeling Series”? Here you can find the previous articles in this series [link to the full series].
