From Noise to Meaning: Unraveling the Reverse Process of Diffusion Models (DDMs)

A Door For Going Back Through Time.

Table of Contents

Introduction.

Notations.

Problem Definition.

A Quick Look at the Reverse Process System.

The Objective Function.

Architecture.

Recap.

References.

1. Introduction

In our last post, we addressed the forward process in denoising diffusion models (DDMs). We defined the forward process and gave its mathematical definition. Then, we took a brief detour to discuss an important technique that Bayesian statisticians use to deal with stochastic processes: the reparameterization technique. After that, we discussed two formalizations of the forward process, one that uses the reparameterization technique and one that does not.

We also derived the forward process equation and explained the math behind it in detail. Finally, we talked about the diffusion schedule and explained how it lets us compute the forward process in closed form, in a single operation, without going over all the steps.

Today, we will address the opposite twin of the forward process: the reverse process. This process helps us recover a meaningful sample from pure noise. First, we will define the reverse process problem. Second, we will go quickly over the workflow the reverse process follows to recover our input sample.

Third, we will delve into the objective function that trains the reverse process model to predict the noise, and finally, we will explain the main components of the reverse process model as described in the original paper. So, without further ado, let’s get started!

2. Notations

3. Problem Definition

3.1 Definition

The reverse process is:

The process of removing noise gradually from a noisy sample over many steps, until the noisy sample is converted back to its original clean form.

In other words, the goal of the reverse process is to find a function that can recreate a true sample from a noisy sample through an iterative denoising process.

3.2 In Math

Let’s assume that we have a data point x sampled from a distribution d, and that we want to perturb it by adding noise ε sampled from a Gaussian distribution N(0, I) over T steps.

From the previous post where we discussed the forward process of diffusion models, we learned that the forward process equation has the following form:

whose closed form we derived as follows:
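For reference, here is how these two equations are usually written in the notation of Ho et al. That notation is an assumption here, since the previous post may use different symbols: β_t is the noise schedule, α_t = 1 − β_t, and ᾱ_t is the product of α_1 through α_t.

```latex
\begin{aligned}
q(x_t \mid x_{t-1}) &= \mathcal{N}\!\left(x_t;\ \sqrt{1-\beta_t}\,x_{t-1},\ \beta_t I\right), \\
q(x_t \mid x_0) &= \mathcal{N}\!\left(x_t;\ \sqrt{\bar\alpha_t}\,x_0,\ (1-\bar\alpha_t)\, I\right), \\
x_t &= \sqrt{\bar\alpha_t}\,x_0 + \sqrt{1-\bar\alpha_t}\,\varepsilon,
\qquad \varepsilon \sim \mathcal{N}(0, I).
\end{aligned}
```

The last line is the reparameterized version of the closed form: it produces x_t from x_0 in one step.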

4. The Reverse Process System

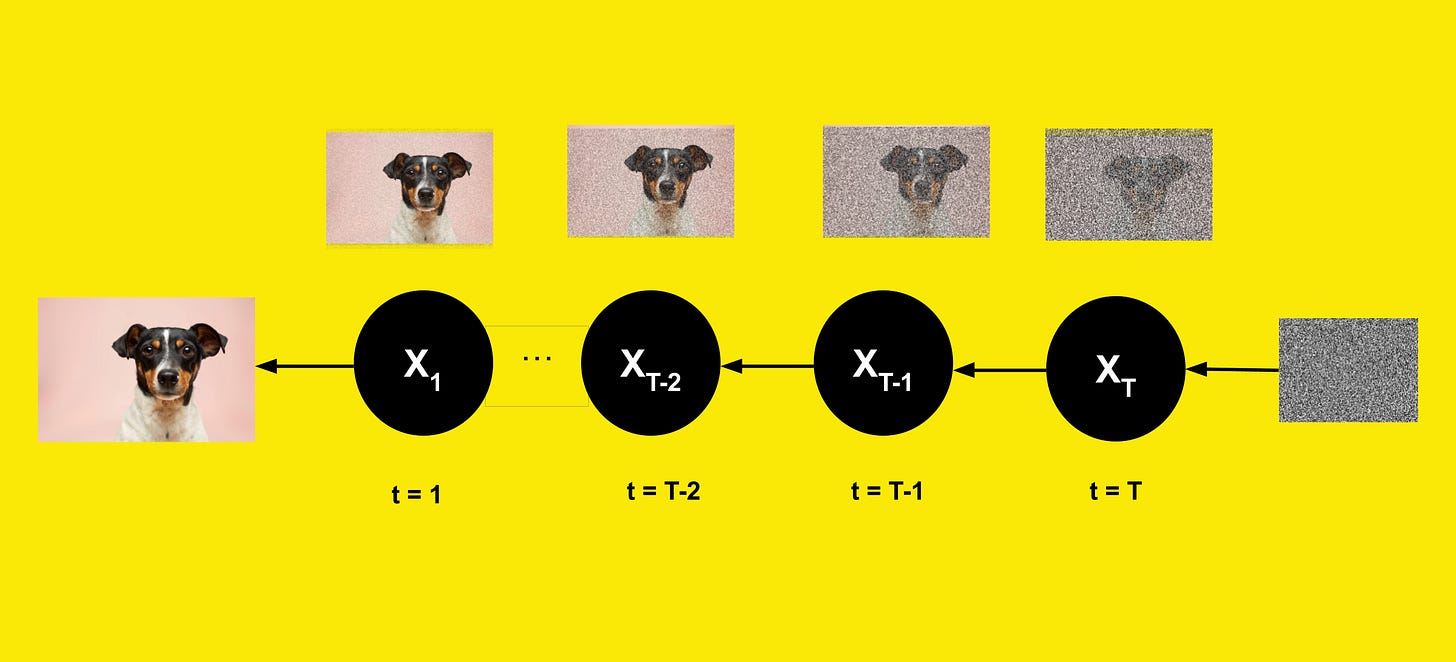

In the reverse process, we want to model the reverse relationship of x through time as follows:

Unfortunately, we cannot model this distribution directly, because a single estimate requires the whole dataset. To explain: the distribution above tries to generate a sample from the past given a sample from the present, and doing so requires access to the entire dataset (or to the present plus the future), not just the current sample.
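One way to see why is to expand the true reverse distribution with Bayes' rule. The sketch below uses the notation of Ho et al., which is an assumption, since this post's own symbols may differ:

```latex
\begin{aligned}
q(x_{t-1} \mid x_t) &= \frac{q(x_t \mid x_{t-1})\, q(x_{t-1})}{q(x_t)}, \\
q(x_t) &= \int q(x_t \mid x_0)\, q(x_0)\, \mathrm{d}x_0 .
\end{aligned}
```

The forward factor q(x_t | x_{t-1}) is a known Gaussian, but the marginals q(x_{t-1}) and q(x_t) average over the whole data distribution q(x_0). That is why a single evaluation would need the entire dataset.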

As an example, if you want to predict what you ate yesterday given what you eat today, you need to know everything about today, not just one of today's meals (e.g., your mood, cooking techniques, seasonings, etc.). This is different from predicting what you will eat today based on yesterday, because in that case you only need to know what you ate yesterday.

In fact, the words “predict” and “past” don't combine naturally in our everyday (Euclidean) world, although the idea does come up in quantum physics. Let’s leave this debatable topic and cut it short for now: “We can’t predict the past given the present.”

Is this the end of the tunnel? What is the solution, then?

We can learn an auxiliary approximate distribution p, with learnable parameters, that lets us sample a past sample given the current one. Be careful, though: this does not mean that p models the true past-future relationship; it only approximates it.
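Written out, the learned reverse chain starts from pure noise x_T and steps backwards. Here θ denotes the learnable parameters, a symbol assumed from Ho et al.:

```latex
\begin{aligned}
p_\theta(x_{0:T}) &= p(x_T) \prod_{t=1}^{T} p_\theta(x_{t-1} \mid x_t),
\qquad p(x_T) = \mathcal{N}(x_T;\ 0,\ I), \\
p_\theta(x_{t-1} \mid x_t) &\approx q(x_{t-1} \mid x_t).
\end{aligned}
```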

Here is a visual illustration of the reverse process:
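The loop can also be sketched in code. This is a minimal NumPy sketch, not the authors' implementation. It assumes the sampling rule of Ho et al. with a linear noise schedule and the variance fixed to β_t. The helper names `make_schedule`, `dummy_eps_model`, and `reverse_process` are hypothetical, and the dummy model stands in for a trained noise predictor.

```python
import numpy as np

def make_schedule(T=50, beta_start=1e-4, beta_end=0.02):
    """Linear noise schedule (the values here are illustrative assumptions)."""
    betas = np.linspace(beta_start, beta_end, T)
    alphas = 1.0 - betas
    alpha_bars = np.cumprod(alphas)  # running product of alphas
    return betas, alphas, alpha_bars

def dummy_eps_model(x_t, t):
    """Hypothetical stand-in for the trained noise predictor."""
    return np.zeros_like(x_t)

def reverse_process(eps_model, shape, T=50, seed=0):
    """Start from pure noise and denoise step by step (steps are 0-indexed)."""
    rng = np.random.default_rng(seed)
    betas, alphas, alpha_bars = make_schedule(T)
    x = rng.standard_normal(shape)  # x_T: pure Gaussian noise
    for t in range(T - 1, -1, -1):
        eps_hat = eps_model(x, t)  # predicted total noise at step t
        # Mean of the learned reverse step, built from the predicted noise.
        mean = (x - betas[t] / np.sqrt(1.0 - alpha_bars[t]) * eps_hat) / np.sqrt(alphas[t])
        # Fresh noise is added at every step except the last one.
        noise = rng.standard_normal(shape) if t > 0 else np.zeros(shape)
        x = mean + np.sqrt(betas[t]) * noise
    return x

sample = reverse_process(dummy_eps_model, shape=(4, 4))
```

With a real trained model in place of `dummy_eps_model`, the returned array would be the generated sample. With the dummy model, it only demonstrates the control flow.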

5. The Objective Function

The objective function for the diffusion model is a simple MSE loss between the total noise that the forward process has added up to the current step t and the noise predicted by a neural network.
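To make this concrete, here is a minimal NumPy sketch of one Monte Carlo estimate of that loss. It assumes the closed-form forward process from the previous post, written in the notation of Ho et al. The function name `noise_prediction_loss`, the schedule values, and `zero_model` (a placeholder for the neural network) are hypothetical.

```python
import numpy as np

def noise_prediction_loss(eps_model, x0, alpha_bars, rng):
    """One-sample estimate of the MSE between the added and predicted noise."""
    T = len(alpha_bars)
    t = rng.integers(0, T)               # pick a random step (0-indexed)
    eps = rng.standard_normal(x0.shape)  # total noise added up to step t
    # Closed-form forward process: jump from x_0 to x_t in one operation.
    x_t = np.sqrt(alpha_bars[t]) * x0 + np.sqrt(1.0 - alpha_bars[t]) * eps
    eps_hat = eps_model(x_t, t)          # the network's noise prediction
    return np.mean((eps - eps_hat) ** 2)

# Illustrative setup: a linear schedule and a placeholder "network".
betas = np.linspace(1e-4, 0.02, 100)
alpha_bars = np.cumprod(1.0 - betas)
rng = np.random.default_rng(0)
x0 = rng.standard_normal((8, 8))
zero_model = lambda x_t, t: np.zeros_like(x_t)

loss = noise_prediction_loss(zero_model, x0, alpha_bars, rng)
```

In training, this value would be averaged over a batch and minimized with respect to the network's weights. A predictor that recovers the noise exactly would drive it to zero.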

The derivation of this loss is left for another post, but here is a simple explanation to give some intuition.

Mathematically, we defined the reverse process as follows:

As we can see, the reverse process function has two learnable parameters: a mean (μ) and a variance (Σ). These parameters are defined as:
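In the parameterization of Ho et al., which is assumed here, the reverse step and its parameters take the form below, with α_t = 1 − β_t and ᾱ_t the product of α_1 through α_t. Their paper keeps the variance fixed rather than learned, while Nichol and Dhariwal learn it.

```latex
\begin{aligned}
p_\theta(x_{t-1} \mid x_t) &= \mathcal{N}\!\left(x_{t-1};\ \mu_\theta(x_t, t),\ \Sigma_\theta(x_t, t)\right), \\
\mu_\theta(x_t, t) &= \frac{1}{\sqrt{\alpha_t}}
\left( x_t - \frac{\beta_t}{\sqrt{1-\bar\alpha_t}}\, \varepsilon_\theta(x_t, t) \right), \\
\Sigma_\theta(x_t, t) &= \sigma_t^2 I, \qquad \sigma_t^2 = \beta_t .
\end{aligned}
```

Here ε_θ is the neural network that predicts the noise, so learning the mean amounts to learning the noise predictor.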

The goal is to make the approximate reverse distribution p as close as possible to the true reverse distribution q by optimizing the following variational bound on the negative log-likelihood:

After some calculation, this bound reduces to a difference between the means of the two distributions, which gives the following reverse objective function:
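As a sketch in the notation of Ho et al. (assumed here, since the derivation is deferred to another post), the bound and the simplified objective it leads to can be written as:

```latex
\begin{aligned}
\mathbb{E}\left[-\log p_\theta(x_0)\right]
&\le \mathbb{E}_q\!\left[-\log \frac{p_\theta(x_{0:T})}{q(x_{1:T} \mid x_0)}\right] =: L, \\
L_{\text{simple}}(\theta)
&= \mathbb{E}_{t,\, x_0,\, \varepsilon}\!\left[
\left\lVert \varepsilon - \varepsilon_\theta\!\left(\sqrt{\bar\alpha_t}\,x_0 + \sqrt{1-\bar\alpha_t}\,\varepsilon,\ t\right) \right\rVert^2
\right].
\end{aligned}
```

The second line is the MSE between the noise added by the forward process and the noise predicted by the network.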

6. Architecture

We can notice the diffusion objective function has a learnable component:

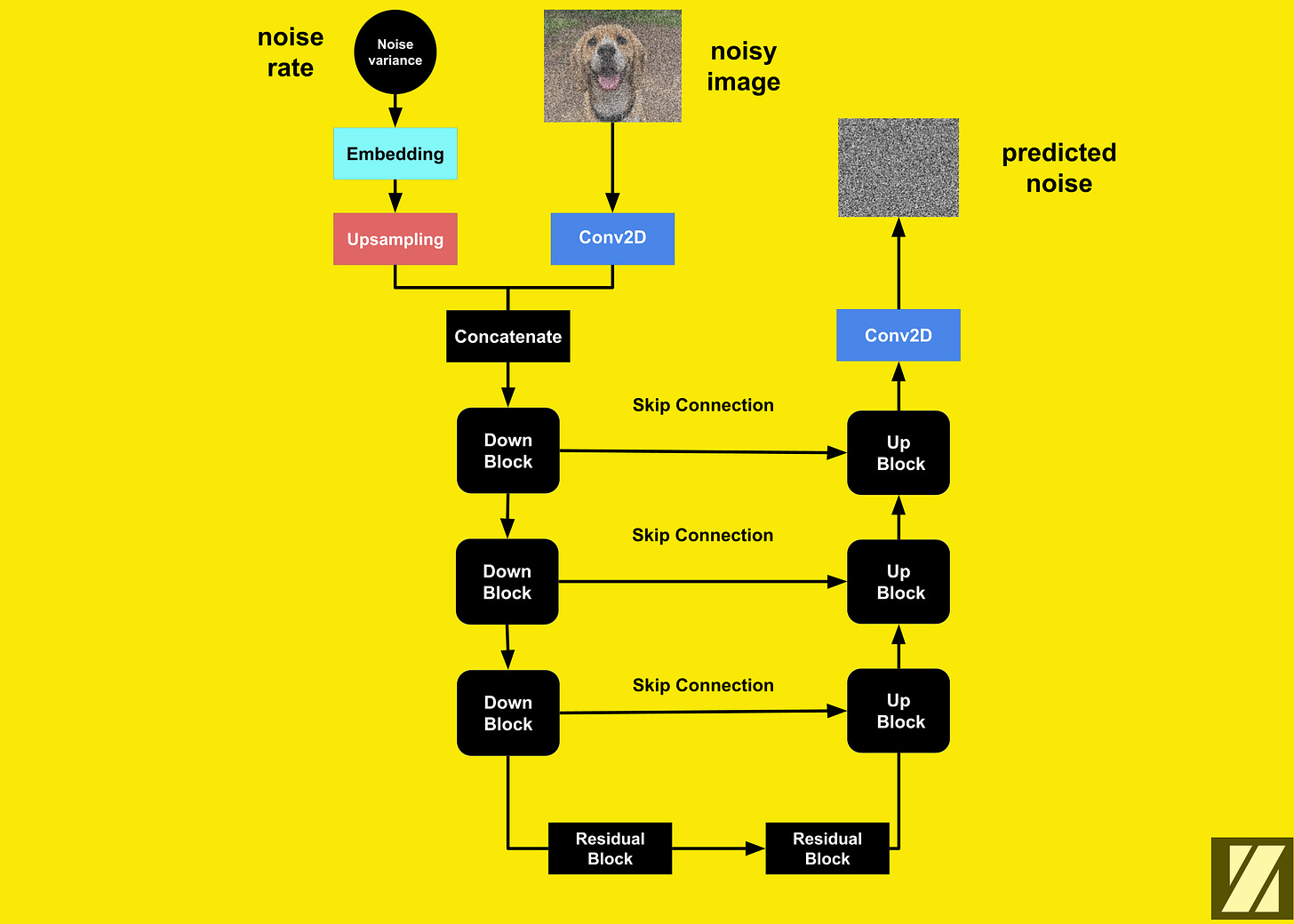

[Ho et al.] defined this as a UNet model that, given a noisy image and a noise rate, predicts the total amount of noise that was added to the input sample.

As we mentioned in this post, the UNet architecture is a popular computer vision architecture for image segmentation tasks. It consists of the following components:

Upsampling Block: To increase the spatial dimension of the noise variance vector.

Convolution Block: To increase the number of channels of the input sample.

DownBlock: To compress the input sample spatially and expand channel-wise.

UpBlock: To expand the input sample in the spatial dimension while reducing the channel dimension.

Residual Block: To mitigate the vanishing gradient problem during the training process.

Skip Connection Block: To allow the information to shortcut parts of the network and flow through to later layers.

How Does It Work?

Input/Output (I/O): The inputs to the UNet model are the noise rate at time step t and the noisy image. The model predicts the total amount of noise that was added to the input sample.

In the beginning, we will change the representation of the noise rate and the noisy image using a sinusoidal embedding layer and a convolution layer respectively.
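As an illustration of the first of these two layers, here is a minimal NumPy sketch of a sinusoidal embedding. The function name, the embedding size, and the frequency range are illustrative assumptions, not values taken from the paper. `dim` is assumed to be even.

```python
import numpy as np

def sinusoidal_embedding(noise_rate, dim=32, min_freq=1.0, max_freq=1000.0):
    """Map scalar noise rate(s) to a vector of sines and cosines."""
    noise_rate = np.atleast_1d(np.asarray(noise_rate, dtype=np.float64))
    # dim // 2 frequencies, spaced geometrically between min_freq and max_freq.
    freqs = np.exp(np.linspace(np.log(min_freq), np.log(max_freq), dim // 2))
    angles = 2.0 * np.pi * noise_rate[:, None] * freqs[None, :]
    # First half of the vector holds sines, second half holds cosines.
    return np.concatenate([np.sin(angles), np.cos(angles)], axis=-1)

emb = sinusoidal_embedding([0.1, 0.5])  # one row per noise rate
```

Each noise rate becomes a row of `dim` values in [-1, 1]. This gives the network a richer representation of the noise level than a single scalar would.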

After that, we concatenate the two outputs from the previous step and send the result to a downsampling block, which compresses it spatially and expands it channel-wise.

Then, the output of the downsampling block is processed by a residual block, which changes neither the spatial nor the channel dimensions. Residual layers help stabilize the network during training and avoid the vanishing gradient problem.

Before the final prediction, the output of the previous stage is upsampled to increase its spatial dimensions and reduce its number of channels.

Then, we send the result to another convolutional layer to reduce the number of channels to 3.
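The steps above can be traced with a toy NumPy sketch that only tracks tensor shapes. It is not a trainable UNet: the weights are random, every convolution is 1x1, and all function names and channel sizes are hypothetical. A channels-last layout (height, width, channels) is assumed.

```python
import numpy as np

rng = np.random.default_rng(0)

def conv1x1(x, out_channels):
    """Toy 1x1 convolution with random weights: changes channels only."""
    w = rng.standard_normal((x.shape[-1], out_channels)) * 0.1
    return x @ w

def downsample(x):
    """2x2 average pooling: halves height and width."""
    h, w, c = x.shape
    return x.reshape(h // 2, 2, w // 2, 2, c).mean(axis=(1, 3))

def upsample(x):
    """Nearest-neighbour upsampling: doubles height and width."""
    return x.repeat(2, axis=0).repeat(2, axis=1)

def residual_block(x):
    """Adds a transformed copy of x to x itself; the shape is unchanged."""
    return x + np.maximum(conv1x1(x, x.shape[-1]), 0.0)

def toy_unet(noisy_image, noise_embedding):
    h, w, _ = noisy_image.shape
    x = conv1x1(noisy_image, 32)                # (H, W, 32)
    # Repeat the embedding at every pixel so it can be concatenated.
    emb = np.broadcast_to(noise_embedding, (h, w, noise_embedding.shape[-1]))
    x = np.concatenate([x, emb], axis=-1)       # (H, W, 64)
    skip = x                                    # saved for the skip connection
    x = conv1x1(downsample(x), 128)             # (H/2, W/2, 128)
    x = residual_block(x)                       # (H/2, W/2, 128)
    x = conv1x1(upsample(x), 64)                # (H, W, 64)
    x = np.concatenate([x, skip], axis=-1)      # (H, W, 128)
    return conv1x1(x, 3)                        # (H, W, 3): predicted noise

pred_noise = toy_unet(rng.standard_normal((16, 16, 3)), rng.standard_normal(32))
```

The output has the same shape as the noisy image. That is what lets the network's prediction be compared pixel by pixel with the noise that was actually added.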

Your Takeaway From This Post!

The standard loss function for diffusion models is:

7. Recap

In this post, we addressed the following:

We shifted our focus to the reverse process and the challenge of making sense out of noise.

We began by defining the problem—how to recover meaningful data from noise—and provided an overview of how it works.

We then explored the diffusion objective function, which trains the reverse process model to predict the noise that was added.

One of the significant challenges we encountered was the need for access to the entire dataset for a single estimation, which can be impractical.

We noted that predicting the past from the present isn't straightforward in our usual world (Euclidean space), but it's an intriguing concept in quantum physics.

To address this challenge, we introduced a practical solution: an auxiliary function labeled "p" that helps in sampling a past state based on the current one.

The objective function quantifies the differences between added noise and the neural network's predictions.

Finally, we explored the UNet model, consisting of various components and blocks working together to predict cumulative noise.

8. References

Jonathan Ho, Ajay Jain, and Pieter Abbeel. "Denoising Diffusion Probabilistic Models" (2020).

Alex Nichol and Prafulla Dhariwal. "Improved Denoising Diffusion Probabilistic Models" (2021).

David Foster. "Generative Deep Learning, 2nd Edition" (2023).

Lilian Weng. "What are Diffusion Models?" (2021). Lilian Weng's Blog.

Before Goodbye!

Want to Cite this Article?

@article{khamies2023noise,
  title = "From Noise to Meaning: Unraveling the Reverse Process of Diffusion Models (DDMs)",
  author = "Waleed Khamies",
  journal = "Zitoon.ai",
  year = "2023",
  month = "Sept",
  url = "https://publication.zitoon.ai/from-noise-to-meaning-unraveling-the-reverse-process-of-diffusion-models"
}

New to this Series?

New to the “Generative Modeling Series”? Here you can find the previous articles in this series [link to the full series].
