Optimizing Diffusion: Techniques to Speed up Diffusion Models (DDMs)

How can we speed up diffusion models?

Table of Contents

Introduction

The problem with standard diffusion models

Speed Optimization: Training-Free Approach vs. Training-Based Approach

Training-Free Approach

Stride Sampling

Parallel Sampling

Training-Based Approach

Pruning Technique

Quantization Technique

Sparsifying Technique

Model Distillation

Denoising Diffusion Implicit Models (DDIMs)

Recap

1. Introduction

In our last post, we learned how to generate a new sample from diffusion models (DDMs). We presented the main sampling equation of diffusion models and explained each term. Then we translated that equation into a working algorithm and visualized each of its steps. After that, we showed why that sampling algorithm is too slow to use in practice, and we introduced a simple technique that helps speed up the sampling process.

Today, we will dive deep into diffusion model optimization by discussing the main techniques used to accelerate sampling (inference) from diffusion models. First, we will briefly discuss why standard diffusion models are slow in practice. Then we will explain the two main categories of methods for optimizing diffusion models. Finally, we will go over Denoising Diffusion Implicit Models (DDIMs), a popular training-free approach used in practice to speed up diffusion models and make them ready for production. Let's get started!

2. Why Are Standard Diffusion Models (DDMs) Slow?

Standard diffusion models are slow at generating new samples for two main reasons:

Many Steps: Diffusion models are defined over many time steps (e.g., T = 1000 in the original DDPM paper), and sampling has to pass through all of them. Each step involves predicting the noise with a neural network and then computing the next, slightly less noisy state of the Markov chain.

Sequential Execution: The reverse (sampling) process runs in sequential order, where each time step depends on the previous one. This type of process is known as a Markov process, which states that:

A given state depends only on the state immediately before it.

This creates a bottleneck during sampling: each state must wait for the previous state to finish its computation before its own computation can start, so the steps cannot run in parallel.
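To make the two bottlenecks concrete, here is a minimal NumPy sketch of standard DDPM-style sampling. It assumes a linear β schedule from 1e-4 to 0.02 over T = 1000 steps (the setting used by Ho et al.) and uses a hypothetical `CountingModel` in place of the trained noise-estimation network, so the generated values themselves are meaningless; the point is that the loop makes T network calls, and each call needs the `x` produced by the previous iteration.

```python
import numpy as np

def make_schedule(T, beta_start=1e-4, beta_end=0.02):
    # Linear beta schedule; alpha_bars[t] is the cumulative product of (1 - beta).
    betas = np.linspace(beta_start, beta_end, T)
    alphas = 1.0 - betas
    alpha_bars = np.cumprod(alphas)
    return betas, alphas, alpha_bars

class CountingModel:
    """Hypothetical stand-in for the noise-estimation network: it only counts calls."""

    def __init__(self):
        self.calls = 0

    def __call__(self, x, t):
        self.calls += 1
        return np.zeros_like(x)  # a real model would predict the noise in x at step t

def ddpm_sample(model, shape, T, seed=0):
    rng = np.random.default_rng(seed)
    betas, alphas, alpha_bars = make_schedule(T)
    x = rng.standard_normal(shape)               # x_T ~ N(0, I)
    for t in reversed(range(T)):                 # t = T-1, ..., 0 (0-indexed)
        eps = model(x, t)                        # needs the x produced by the previous step
        mean = (x - betas[t] / np.sqrt(1.0 - alpha_bars[t]) * eps) / np.sqrt(alphas[t])
        if t > 0:
            # Add fresh noise with variance sigma_t^2 = beta_t (one of the DDPM choices).
            x = mean + np.sqrt(betas[t]) * rng.standard_normal(shape)
        else:
            x = mean
    return x

model = CountingModel()
x0 = ddpm_sample(model, shape=(4,), T=1000)
print(model.calls)  # 1000 sequential network evaluations
```

Because `x` is overwritten on every iteration, step t - 1 cannot start before step t has finished, which is exactly the sequential bottleneck described above.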

3. Speed Optimization: Training-Free Approach vs. Training-Based Approach

There are two broad categories of methods to optimize diffusion models:

Training-Free Approach: This approach speeds up sampling from an already-trained model, for example by reducing the number of diffusion steps or by running steps in parallel.

Training-Based Approach: This approach achieves the speed-up by modifying the model's network (its architecture or its weights), which requires training or fine-tuning.

3.1 Training-Free Approach

The term "training-free" means that these methods are not part of the training process: they are applied only at the inference stage, to an already-trained model. As we discussed in an earlier post, the sampling process of diffusion models can be viewed as solving an Ordinary Differential Equation (ODE) or a Stochastic Differential Equation (SDE), so speeding it up amounts to finding a better, faster solver. Training-free methods can be further divided into two groups:

3.1.1. Stride Sampling:

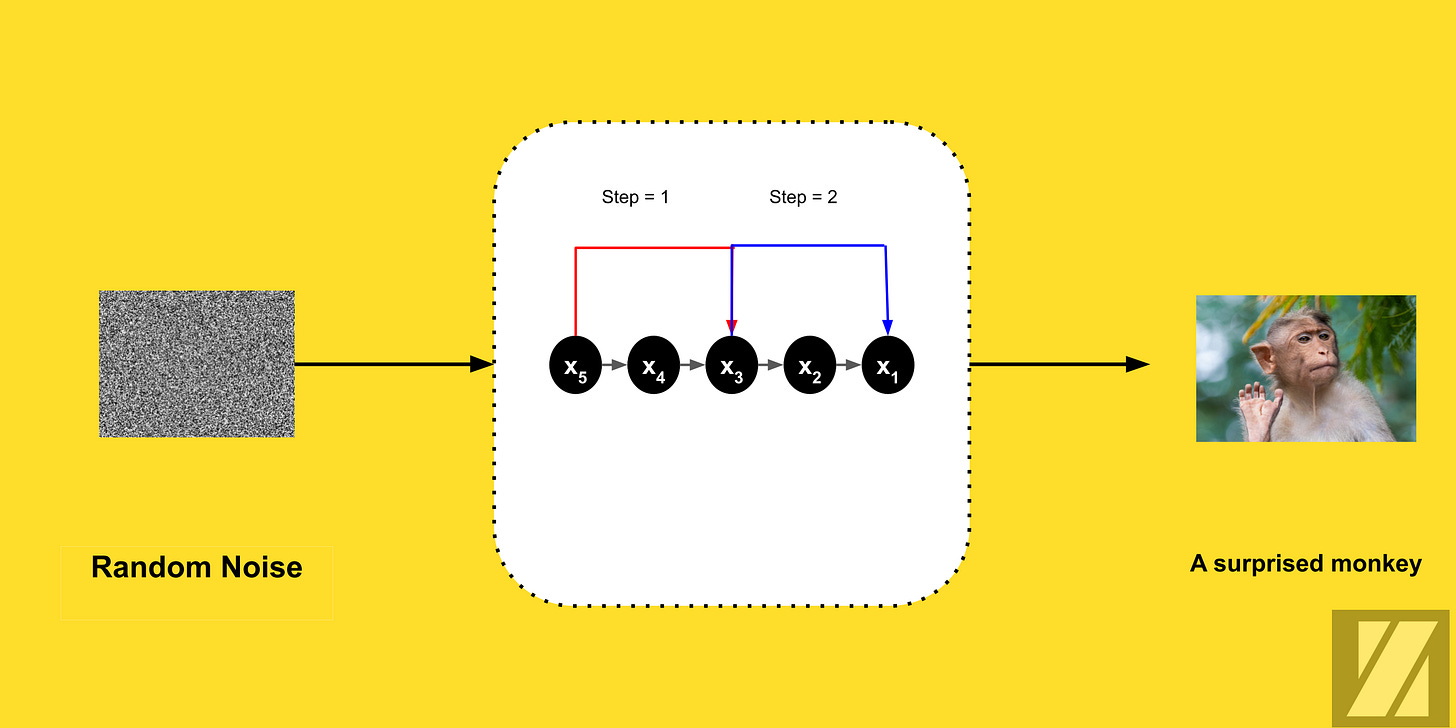

In stride sampling, we reduce the number of denoising steps by visiting only a subset of the time steps. This breaks the Markov assumption that each state depends only on the state immediately before it. During sampling (the reverse process), the assumption does not need to hold, so we can skip several steps at once when estimating the next state.

Let's look at the figure above. During inference (sampling), we go backward in time from t = 600 to t = 1. Instead of using the result at step 361 to estimate the noisy image at step 360, we jump directly from t = 390 to t = 360, using a step size of 30.
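The time steps visited in the figure can be generated with a one-line helper. The sketch below is illustrative: `strided_timesteps` is a hypothetical name, and it assumes the schedule starts at t = 600 and stops before t = 0 (the final jump to a clean sample is left to the sampler), so its exact end point may differ from the figure.

```python
def strided_timesteps(t_start, stride):
    """Return the descending time steps visited by stride sampling (hypothetical helper).

    Visits t_start, t_start - stride, ... down to (but not including) 0.
    """
    if stride < 1:
        raise ValueError("stride must be a positive integer")
    return list(range(t_start, 0, -stride))

steps = strided_timesteps(600, 30)
print(len(steps))               # 20 network evaluations instead of 600
print(steps[:3])                # [600, 570, 540]
print(steps[steps.index(390) + 1])  # 360: the step after 390 is 360, not 389
```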

Examples of Stride Sampling Algorithms:

Improved Denoising Diffusion Probabilistic Models (Improved DDPMs).

Denoising Diffusion Implicit Models (DDIMs).

DPM-Solver: A Fast ODE Solver for Diffusion Probabilistic Model Sampling in Around 10 Steps (DPM-Solver).

Note

The names of these algorithms come from the papers that introduced them.

In each case, stride sampling is introduced as part of the main algorithm proposed by the paper.

Why does stride sampling work?

In practice, these papers observed that the sample quality of diffusion models does not degrade significantly when the Markov assumption is broken: the refinement performed by the denoising network over the remaining steps is enough to produce satisfactory results.

3.1.2. Parallel Sampling:

In this class of methods, the aim is to leverage the computation power of GPUs by parallelizing the work of diffusion models. Here are some examples of such methods:

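Picard Iteration Methods: Picard iteration is a classic fixed-point method for solving (non-linear) differential equations. It starts from a guess of the whole trajectory and refines it repeatedly; because each refinement evaluates every time step independently, these evaluations can be run in parallel on GPUs.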

As an example, the ParaDiGMS algorithm accelerates the sampling of pretrained diffusion models by denoising multiple steps in parallel via the Picard iterations algorithm.
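The core idea behind Picard-based parallel sampling can be shown on a toy ODE. This is a simplified sketch and not ParaDiGMS itself: it uses an assumed drift, dx/dt = -x + sin(t), in place of a denoising network, and it refines the whole trajectory at once instead of within a sliding window. Each Picard iteration evaluates the drift at every time step in one vectorized call (the part a GPU can parallelize), and the iteration converges to the same trajectory that sequential Euler steps produce.

```python
import numpy as np

def drift(x, t):
    # Toy drift standing in for the expensive denoising network (an assumption).
    return -x + np.sin(t)

def euler_sequential(x0, ts):
    xs = [x0]
    for k in range(len(ts) - 1):
        dt = ts[k + 1] - ts[k]
        xs.append(xs[-1] + drift(xs[-1], ts[k]) * dt)  # each step waits for the previous one
    return np.array(xs)

def picard_parallel(x0, ts, tol=1e-10):
    n = len(ts) - 1
    dts = np.diff(ts)
    xs = np.full(len(ts), x0, dtype=float)  # initial guess: a constant trajectory
    iters = 0
    for iters in range(1, n + 1):
        f = drift(xs[:-1], ts[:-1])         # all n drift evaluations at once (parallelizable)
        new_xs = np.concatenate(([x0], x0 + np.cumsum(f * dts)))
        done = np.max(np.abs(new_xs - xs)) < tol
        xs = new_xs
        if done:
            break
    return xs, iters

ts = np.linspace(0.0, 1.0, 101)
seq = euler_sequential(1.0, ts)
par, iters = picard_parallel(1.0, ts)
print(np.max(np.abs(seq - par)), iters)
```

In this toy example the iteration converges in far fewer iterations than there are time steps; when the drift evaluations inside each iteration run in parallel, the wall-clock cost follows the iteration count rather than the trajectory length.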

Normalizing Flow Methods: Normalizing flows are a class of generative models that transform a simple distribution into a complex one through a series of invertible, differentiable transformations, which enables density estimation, generative modeling, and sampling.

RAD-TTS uses normalizing flows in its mel-decoder: composing invertible functions allows the mel-spectrogram frames to be processed in parallel, which makes the TTS system efficient and scalable.
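The invertibility that flows rely on can be illustrated with an affine coupling layer, the building block of flows such as RealNVP and Glow. This is a minimal sketch, not the RAD-TTS decoder: the random matrices `W_s` and `W_t` are assumptions standing in for learned networks, and each frame is transformed independently so that all 100 frames are handled in a single batched operation.

```python
import numpy as np

rng = np.random.default_rng(0)
D = 8                                               # features per frame (assumed, must be even)
W_s = rng.standard_normal((D // 2, D // 2)) * 0.1   # hypothetical weights for the log-scale
W_t = rng.standard_normal((D // 2, D // 2)) * 0.1   # hypothetical weights for the shift

def coupling_forward(x):
    """Affine coupling: keep the first half of each frame, scale-and-shift the second half."""
    x_a, x_b = x[:, : D // 2], x[:, D // 2 :]
    log_s = np.tanh(x_a @ W_s)          # scale depends only on the untouched half
    t = x_a @ W_t
    y_b = x_b * np.exp(log_s) + t
    log_det = log_s.sum(axis=1)         # log |det Jacobian| per frame, used for density estimation
    return np.concatenate([x_a, y_b], axis=1), log_det

def coupling_inverse(y):
    y_a, y_b = y[:, : D // 2], y[:, D // 2 :]
    log_s = np.tanh(y_a @ W_s)          # y_a equals x_a, so scale and shift can be recomputed
    t = y_a @ W_t
    x_b = (y_b - t) * np.exp(-log_s)
    return np.concatenate([y_a, x_b], axis=1)

frames = rng.standard_normal((100, D))  # e.g. 100 spectrogram-like frames
y, log_det = coupling_forward(frames)   # all frames transformed in one batched call
recovered = coupling_inverse(y)
print(np.allclose(recovered, frames))   # True: the transformation is exactly invertible
```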

3.2 Training-Based Approach

The second approach to speeding up diffusion models modifies the neural network used in the diffusion process, for example by removing network blocks, adding new ones, or changing how the weights are represented, and therefore requires training or fine-tuning. Here are some popular training-based techniques:

Pruning Technique: Pruning removes network weights (or entire channels and blocks) that contribute little to the output, which reduces the amount of computation and increases inference speed.
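A common concrete form of pruning is unstructured magnitude pruning, sketched below in NumPy. The helper `magnitude_prune` is hypothetical and assumes that the smallest-magnitude weights matter least. In practice the pruned model is usually fine-tuned afterwards, and zeroed weights only turn into real speed-ups with structured pruning (removing whole channels or blocks) or with sparse kernels.

```python
import numpy as np

def magnitude_prune(weights, sparsity):
    """Zero out roughly the `sparsity` fraction of weights with the smallest magnitude.

    Returns the pruned weights and the boolean mask of weights that were kept.
    """
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    k = int(sparsity * weights.size)                  # number of weights to remove
    if k == 0:
        return weights.copy(), np.ones_like(weights, dtype=bool)
    threshold = np.sort(np.abs(weights), axis=None)[k - 1]
    mask = np.abs(weights) > threshold                # keep only weights above the threshold
    return weights * mask, mask

w = np.array([[0.9, -0.05, 0.3], [-0.7, 0.01, -0.2]])
pruned, mask = magnitude_prune(w, sparsity=0.5)
print(pruned)
print(mask.sum(), "of", w.size, "weights kept")
```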

Quantization Technique: This technique speeds up inference by compressing the noise-estimation model: it reduces the numerical precision of the weights, biases, and activations (e.g., from 32-bit floats to 8-bit values). Lower-precision values take less memory and make the model's operations faster on hardware that supports them.
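The sketch below shows symmetric per-tensor quantization of float32 weights. It uses 8-bit integers (`int8`) as a common concrete target, which is an assumption: the text above mentions 8-bit floats, and real deployments usually rely on a framework's quantization toolkit rather than hand-written helpers like the hypothetical `quantize_int8`.

```python
import numpy as np

def quantize_int8(w):
    """Symmetric per-tensor quantization of float32 weights to int8."""
    scale = np.max(np.abs(w)) / 127.0      # map the largest |w| to 127
    if scale == 0:
        scale = 1.0                        # all-zero tensor: any scale works
    q = np.clip(np.round(w / scale), -127, 127).astype(np.int8)
    return q, scale

def dequantize(q, scale):
    # Recover approximate float32 weights from the int8 codes.
    return q.astype(np.float32) * scale

w = np.random.default_rng(0).standard_normal(1000).astype(np.float32)
q, scale = quantize_int8(w)
w_hat = dequantize(q, scale)
print(w.nbytes, q.nbytes)                  # 4000 1000: the weights take 4x less memory
print(np.max(np.abs(w - w_hat)))           # rounding error, at most about scale / 2
```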

Sparsifying Technique: This method maintains the sparsity of the noise-estimation model dynamically during training. It adds a regularization term, such as an L1 penalty, to the training loss (e.g., the L2 noise-prediction loss); by penalizing non-zero weights, this term encourages the network to learn sparse representations.
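As an illustration of how a penalty on non-zero weights produces sparsity, the sketch below fits a toy linear model with an L2 (mean squared error) loss plus an L1 penalty, using proximal gradient descent. This is an assumed, generic example rather than a diffusion-specific method; the helper names are hypothetical. The L1 term is what drives irrelevant weights exactly to zero, which a plain L2 penalty would not do.

```python
import numpy as np

def soft_threshold(w, thresh):
    # Proximal operator of thresh * ||w||_1: shrinks weights and sets small ones exactly to zero.
    return np.sign(w) * np.maximum(np.abs(w) - thresh, 0.0)

def train_sparse_linear(X, y, lam=0.1, lr=0.1, steps=500):
    """Fit y ~ X @ w with loss = MSE + lam * ||w||_1 (proximal gradient descent)."""
    w = np.zeros(X.shape[1])
    for _ in range(steps):
        grad = 2.0 * X.T @ (X @ w - y) / len(y)        # gradient of the L2 (MSE) term
        w = soft_threshold(w - lr * grad, lr * lam)    # gradient step, then L1 shrinkage
    return w

rng = np.random.default_rng(0)
X = rng.standard_normal((200, 10))
true_w = np.zeros(10)
true_w[:3] = [2.0, -1.5, 1.0]                          # only 3 of the 10 features matter
y = X @ true_w + 0.01 * rng.standard_normal(200)
w = train_sparse_linear(X, y)
print(np.round(w, 2))
print("non-zero weights:", np.count_nonzero(w))
```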

Model Distillation: Model distillation (or knowledge distillation) is one of the most popular approaches to speeding up inference in diffusion models. It transfers the knowledge of the original (teacher) network used in the diffusion process into a student network that is simpler and smaller, or that needs fewer sampling steps; either way, inference time is reduced.

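A model can be distilled more than once. In progressive distillation, distillation is repeated over several rounds: in each round, a student learns to reproduce two sampling steps of its teacher in a single step, halving the number of sampling steps, and then serves as the teacher for the next round. The term knowledge distillation usually refers to a single round of distillation.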
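The halving schedule of progressive distillation can be illustrated on a toy sampler. In this simplified sketch, every sampler step is assumed to be a scalar linear map, so "training" the student reduces to a closed-form least-squares fit of one parameter to two teacher steps; real progressive distillation trains a full network with a specific regression target. The helper names are hypothetical. After four rounds, a 64-step sampler becomes a 4-step sampler with the same output.

```python
import numpy as np

def run_sampler(step_fn, x, num_steps):
    # Apply the sampler's step function num_steps times.
    for _ in range(num_steps):
        x = step_fn(x)
    return x

def distill_round(teacher_step, rng, n_samples=256):
    """One round: fit a student step that matches TWO teacher steps (least squares).

    Toy assumption: each step is x -> a * x, so the student has a single parameter a.
    """
    x = rng.standard_normal(n_samples)
    target = teacher_step(teacher_step(x))             # two teacher steps
    a = float(np.dot(x, target) / np.dot(x, x))        # closed-form least-squares fit

    def student_step(z):
        return a * z

    return student_step

def euler_step(z, h=1.0 / 64):
    # Original sampler step: one Euler step of the toy ODE dx/dt = -x.
    return z * (1.0 - h)

rng = np.random.default_rng(0)
num_steps = 64
x_T = rng.standard_normal(5)
reference = run_sampler(euler_step, x_T, num_steps)

step = euler_step
for _ in range(4):
    step = distill_round(step, rng)                    # the student becomes the next teacher
    num_steps //= 2
    out = run_sampler(step, x_T, num_steps)
    print(num_steps, np.max(np.abs(out - reference)))  # steps halve, output stays the same
```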

5. Denoising Diffusion Implicit Models (DDIMs)

DDIMs are one of the most popular approaches to speeding up diffusion models. As a member of the stride sampling family, DDIM achieves fast sample generation by selecting a subset of the time steps, breaking the Markov assumption. DDIMs introduce two key changes to the DDPM sampling procedure (the trained noise-estimation network itself can stay the same):

Implicit Generation: DDIMs remove the stochasticity from the sampling process. That means the same random noise will produce the same output every time it is processed by the DDIM algorithm.

Fast Sampling: DDIMs use stride sampling to generate samples. Instead of denoising the noisy sample over all T steps, we define a step size 𝜏 smaller than T (𝜏 < T) and visit only every 𝜏-th time step, which reduces the number of network evaluations from T to roughly T/𝜏.

5.1 Implicit Generation

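The standard sampling equation of diffusion models, written in the generalized form used in the DDIM paper, is:

$$
x_{t-1} = \sqrt{\bar{\alpha}_{t-1}} \left( \frac{x_t - \sqrt{1 - \bar{\alpha}_t}\, \epsilon_\theta(x_t, t)}{\sqrt{\bar{\alpha}_t}} \right) + \sqrt{1 - \bar{\alpha}_{t-1} - \sigma_t^2}\; \epsilon_\theta(x_t, t) + \sigma_t z, \qquad z \sim \mathcal{N}(0, I)
$$

where $\bar{\alpha}_t$ is the cumulative product of the noise schedule, $\epsilon_\theta$ is the noise-estimation network, and $\sigma_t$ controls how much fresh noise is injected at each step. Choosing $\sigma_t^2 = \frac{1 - \bar{\alpha}_{t-1}}{1 - \bar{\alpha}_t} \left( 1 - \frac{\bar{\alpha}_t}{\bar{\alpha}_{t-1}} \right)$ recovers DDPM sampling.

In the case of DDIMs, we set σ to zero (σ = 0), which removes the random term and results in the following deterministic sampling equation:

$$
x_{t-1} = \sqrt{\bar{\alpha}_{t-1}} \left( \frac{x_t - \sqrt{1 - \bar{\alpha}_t}\, \epsilon_\theta(x_t, t)}{\sqrt{\bar{\alpha}_t}} \right) + \sqrt{1 - \bar{\alpha}_{t-1}}\; \epsilon_\theta(x_t, t)
$$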
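The deterministic update (σ = 0) first predicts the clean sample from x_t and the predicted noise, then moves that prediction to the target time step. The NumPy sketch below (the function name `ddim_step` is hypothetical) checks that, given a perfect noise estimate, the step lands exactly on the corresponding noisy sample at the earlier time step, and that calling it twice gives identical results because no random noise is drawn.

```python
import numpy as np

def ddim_step(x_t, eps_pred, alpha_bar_t, alpha_bar_prev):
    """Deterministic DDIM update (sigma = 0) from step t to an earlier step.

    alpha_bar_prev is the cumulative alpha of the target step, which need not be t - 1.
    """
    x0_pred = (x_t - np.sqrt(1.0 - alpha_bar_t) * eps_pred) / np.sqrt(alpha_bar_t)
    return np.sqrt(alpha_bar_prev) * x0_pred + np.sqrt(1.0 - alpha_bar_prev) * eps_pred

rng = np.random.default_rng(0)
x0 = rng.standard_normal(3)
eps = rng.standard_normal(3)
alpha_bar_t, alpha_bar_prev = 0.5, 0.8
x_t = np.sqrt(alpha_bar_t) * x0 + np.sqrt(1.0 - alpha_bar_t) * eps  # forward-noised sample

x_prev = ddim_step(x_t, eps, alpha_bar_t, alpha_bar_prev)
expected = np.sqrt(alpha_bar_prev) * x0 + np.sqrt(1.0 - alpha_bar_prev) * eps
print(np.allclose(x_prev, expected))  # True: with a perfect noise estimate, the step is exact
```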

5.2 Fast Sampling

During sampling, we are free to denoise the initial random noise over all T steps used during training, or over just a subset of those steps.

DDIMs take advantage of this freedom by breaking the Markov assumption and skipping steps during sampling: each update jumps directly from time step t to an earlier time step t - 𝜏 instead of t - 1.
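Putting the pieces together, here is a minimal, self-contained sketch of strided DDIM sampling. It assumes T = 1000 training steps with a linear β schedule, a step size τ = 20, and a toy dataset consisting of a single point, for which a perfect noise predictor (the hypothetical `oracle_noise_model`) can be written in closed form. The sampler visits only 50 of the 1000 time steps and still recovers the data point.

```python
import numpy as np

T = 1000
betas = np.linspace(1e-4, 0.02, T)              # linear schedule (assumed, as in DDPM)
alpha_bars = np.cumprod(1.0 - betas)
x_data = np.array([1.0, -2.0, 0.5])             # toy "dataset": a single point
call_count = [0]                                # mutable counter of network calls

def oracle_noise_model(x_t, t):
    """Hypothetical perfect noise predictor for the single-point dataset above."""
    call_count[0] += 1
    return (x_t - np.sqrt(alpha_bars[t]) * x_data) / np.sqrt(1.0 - alpha_bars[t])

def ddim_sample(x_T, tau):
    x = x_T
    timesteps = list(range(T - 1, -1, -tau))    # visit every tau-th step: 999, 979, ..., 19
    for i, t in enumerate(timesteps):
        eps = oracle_noise_model(x, t)
        x0_pred = (x - np.sqrt(1.0 - alpha_bars[t]) * eps) / np.sqrt(alpha_bars[t])
        if i + 1 < len(timesteps):
            a_prev = alpha_bars[timesteps[i + 1]]  # jump straight to the next visited step
        else:
            a_prev = 1.0                           # final jump to clean data (alpha_bar = 1)
        x = np.sqrt(a_prev) * x0_pred + np.sqrt(1.0 - a_prev) * eps  # sigma = 0 update
    return x

x_T = np.random.default_rng(0).standard_normal(3)
sample = ddim_sample(x_T, tau=20)
print(call_count[0])                  # 50 network calls instead of 1000
print(np.allclose(sample, x_data))
```

With a real network the noise estimate is imperfect, so quality depends on τ; the toy oracle only isolates the mechanics of the strided schedule.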

Here is a brief code example that explains how different samplers work:

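```python
# Pseudocode: noise_estimation_model, denoise_DDPM and denoise_DDIM stand for the
# trained network and the update rules above; they are not defined here.

def sample_DDPM(sample, T, noise_estimation_model):
    # Standard DDPM: visit every time step, from T down to 1, in order.
    for t in range(T, 0, -1):
        # .....
        noise = noise_estimation_model(sample, t)
        semi_clean_sample = denoise_DDPM(sample, noise, t)
        sample = semi_clean_sample
    # .....
    return sample


def sample_DDPM_v2(sample, m, noise_estimation_model):
    # DDPM run with fewer steps (m < T): still Markovian, one step at a time.
    for t in range(m, 0, -1):
        # .....
        noise = noise_estimation_model(sample, t)
        semi_clean_sample = denoise_DDPM(sample, noise, t)
        sample = semi_clean_sample
    # .....
    return sample


def sample_DDIM(sample, T, skip_factor, noise_estimation_model):
    # DDIM: keep all T training steps, but only visit m of them.
    m = skip_factor                 # number of sampling steps actually taken
    step_size = T // m              # integer stride between visited time steps
    for t in range(T, 0, -step_size):
        # .....
        noise = noise_estimation_model(sample, t)
        # The DDIM update also needs the target step, since it is no longer t - 1.
        semi_clean_sample = denoise_DDIM(sample, noise, t, t - step_size)
        sample = semi_clean_sample
    # .....
    return sample
```

8. Recap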

In this article, we delved into techniques for speeding up diffusion models, making them more efficient for practical applications.

We identified challenges faced by standard diffusion models, including their slow processing due to a high number of steps and sequential execution.

We discussed two main categories for optimizing diffusion models:

In the Training-Free Approach, we explored methods like Stride Sampling and Parallel Sampling, aimed at reducing steps and parallelizing computations.

In the Training-Based Approach, we discussed techniques such as Pruning, Quantization, Sparsifying, and Model Distillation, focusing on modifying model architecture.

We introduced Denoising Diffusion Implicit Models (DDIMs), a popular approach for expediting diffusion models.

We highlighted two key features of DDIMs: Implicit Generation and Fast Sampling, which contribute to more efficient sample generation.

9. References

Ho, J., Jain, A., & Abbeel, P. "Denoising Diffusion Probabilistic Models" (2020).

Nichol, A., & Dhariwal, P. "Improved Denoising Diffusion Probabilistic Models" (2021).

Foster, D. "Generative Deep Learning, 2nd Edition" (2023).

Weng, L. "What are diffusion models?" Lil’Log (Jul 2021). https://lilianweng.github.io/posts/2021-07-11-diffusion-models/

Chen, Y.-H., Sarokin, R., Lee, J., Tang, J., Chang, C.-L., Kulik, A., & Grundmann, M. "Speed Is All You Need: On-Device Acceleration of Large Diffusion Models via GPU-Aware Optimizations" (2023). ArXiv preprint 2304.11267.

Chen, G. "Speed up the inference of diffusion models via shortcut MCMC sampling" (2022). ArXiv preprint 2301.01206.

Wang, Z., Jiang, Y., Zheng, H., Wang, P., He, P., Wang, Z., Chen, W., & Zhou, M. "Patch Diffusion: Faster and More Data-Efficient Training of Diffusion Models" (2023).

Shih, K. J., Valle, R., Badlani, R., Lancucki, A., Ping, W., & Catanzaro, B. "RAD-TTS: Parallel Flow-Based TTS with Robust Alignment Learning and Diverse Synthesis" (2021). ICML Workshop on Invertible Neural Networks, Normalizing Flows, and Explicit Likelihood Models.

Shih, A., Belkhale, S., Ermon, S., Sadigh, D., & Anari, N. "Parallel Sampling of Diffusion Models" (2023). ArXiv preprint 2305.16317.

Before Goodbye!

Want to Cite this Article?

```bibtex
@article{khamies2023optimizing,
  title   = "Optimizing Diffusion: Techniques to Speed up Diffusion Models",
  author  = "Waleed Khamies",
  journal = "Zitoon.ai",
  year    = "2023",
  month   = "Sept",
  url     = "https://publication.zitoon.ai/optimizing-diffusion-techniques-to-speed-up-diffusion-models"
}
```

New to this Series?

New to the “Generative Modeling Series”? Here you can find the previous articles in this series [link to the full series].

Any oversights in this post?

Please report them through this Feedback Form; we really appreciate it!

Thank you for reading!

If you would like to receive the next posts in this series by email, please feel free to subscribe to the ZitoonAI Newsletter. Come and join the family!